Viewfinder, Precision Targets shown at HASTAC II Conference

Digital Humanities, Events, Flex, Viewfinder

6/6/08

HASTAC II, the second annual conference of the Humanities, Arts, Science and Technology Advanced Collaboratory, was held at UC Irvine and UCLA on May 22-24. The theme? “Technotravels.” Unfortunately, scheduling conflicts kept me from many of the sessions (I would have loved to see Brenda Laurel’s provocation, since her book Computers as Theatre was an early inspiration for me), but I was happily able to attend Curtis Wong’s presentation, “From Beethoven to Betelgeuse, 20 Years in the Quest for the Holy Grail of Interactive Storytelling.” It was great to hear what Curtis has been up to since the Voyager days and to get an introduction to his latest project, WorldWide Telescope: a kind of Google Earth for the sky that seamlessly integrates astrophotography from a variety of sources into an experience with plenty of hooks for user-generated content.

The scene at HASTAC II: getting the Viewfinder presentation set up on the HIPerWall.

I presented two projects at HASTAC II, the first of which was Viewfinder. The presentation took place on UC Irvine’s HIPerWall, an extremely high-resolution display made up of fifty 30-inch Apple Cinema Displays linked together. I was able to distribute the presentation materials (slides, two videos, the Viewfinder web interface, and Google Earth itself) across the width of the screen, and while we weren’t running at the display’s native resolution, it was still pretty cool to play with a visual field of that size. During the session I showed how the Viewfinder web application’s UI has evolved since our initial release: in this version, the complete workflow ran as a Flex application using the new Google Maps API for Flash, released the week before. The Viewfinder session was very well attended, and I’m told there was some lively discussion afterwards. Thanks to David Theo Goldberg for inviting me to present on the HIPerWall, and to Sung-Jin Kim for invaluable help with the presentation logistics.

Caren Kaplan and I presenting Precision Targets at HASTAC II.

The following day, Caren Kaplan and I presented our upcoming piece Precision Targets as part of the demo sessions at UCLA. Precision Targets combines six narratives about GPS and its migration from military to civilian use in a comic-book-inspired format (featuring art by Ezra Claytan Daniels), placing the narratives inside a navigable 3D cube with commentary written by Caren. The work was very well received, and we got a lot of great feedback that we hope to turn into momentum to finish the project in the next few months.

Next up: A report on the Electronic Literature Organization conference in Vancouver, Washington…

Viewfinder: Your photos, seamlessly aligned with Google Earth

Announcements, Flash, Flex, Viewfinder

4/4/08

Over the past six months I’ve had the privilege of working with an outstanding group of folks from USC’s Interactive Media Division and the Institute for Creative Technologies on a new project called Viewfinder, directed by Michael Naimark. It’s a departure from my usual work in that it’s more of a pure research project: the goal is to make it easy for people to place their photographs into a 3D world model like Google Earth so that each image is perfectly aligned with the model. We launched the project this week with a website, a demo video, and coverage on the NY Times Bits technology blog.

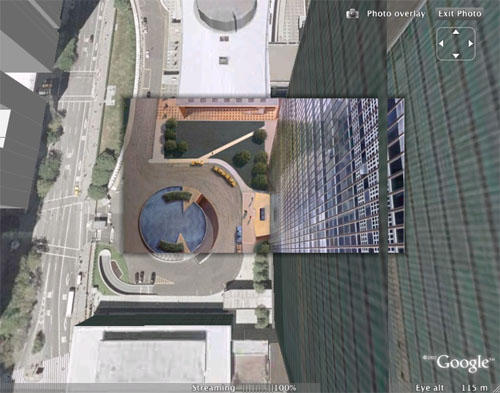

As part of the project, we developed a browser-based 2D method for lining up a photograph with a Google Earth screen shot and then doing the calculations needed to correctly “pose” the photo in 3D in Google Earth. The first part of this method is a Google Maps/Earth mashup developed by Will Carter that lets you pick a point on the earth in Google Maps and see the resulting location in Google Earth (a navigation method that turns out to be much easier than trying to move around at ground level in Google Earth itself).

The second part of the 2D method was a Flex application I developed that lets you drop a photo on top of the Google Earth image and adjust its scale and position until the two are aligned as closely as possible. Some trigonometry is then applied to generate the KML code that correctly places the photo in Google Earth. Once we had the workflow up and running, it was pretty interesting to try posing different kinds of images; my personal favorite was the high-angle matte painting of the United Nations building from Hitchcock’s North by Northwest (see below). It’s amazing how closely the painting matches the Google Earth image, especially considering the angle. I’ve posted a few more stills from the project as well.
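The post doesn't spell out the trigonometry, so here is a minimal sketch of one way it could work; it is not the Viewfinder code itself. It assumes a simple pinhole model in which the camera of the Google Earth screen shot (position, heading, tilt) and its horizontal field of view are known, and that the photo was only scaled and moved, not rotated. Each edge of the photo rectangle is converted to an angle from the view direction, and those angles fill in the `ViewVolume` of a KML 2.2 `PhotoOverlay` that reuses the screen shot's `Camera`. The function names, the `Camera` dataclass, and the default `near` distance are hypothetical.

```python
import math
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass
class Camera:
    """Hypothetical record of the camera used for the Google Earth screen shot."""
    longitude: float
    latitude: float
    altitude: float  # meters above ground (written as relativeToGround below)
    heading: float   # degrees clockwise from north
    tilt: float      # degrees; KML uses 0 = straight down, 90 = horizon
    roll: float = 0.0


def view_volume(screen_w, screen_h, hfov_deg, left, top, width, height):
    """Angles (degrees) from the view direction to each edge of the photo.

    The photo rectangle is in screen-shot pixels (origin top-left, y down).
    Pinhole model: a pixel offset x from the image center lies at an angle
    atan(x / f), where f is the focal length in pixels implied by hfov_deg.
    """
    f = (screen_w / 2) / math.tan(math.radians(hfov_deg) / 2)
    cx, cy = screen_w / 2, screen_h / 2

    def angle(offset):
        return math.degrees(math.atan(offset / f))

    return {
        "leftFov": angle(left - cx),
        "rightFov": angle(left + width - cx),
        "bottomFov": angle(cy - (top + height)),  # flip y so up is positive
        "topFov": angle(cy - top),
    }


def photo_overlay_kml(name, href, cam, fovs, near=10.0):
    """Build a KML 2.2 PhotoOverlay seen from the screen-shot camera."""
    kml = ET.Element("kml", xmlns="http://www.opengis.net/kml/2.2")
    overlay = ET.SubElement(kml, "PhotoOverlay")
    ET.SubElement(overlay, "name").text = name
    camera = ET.SubElement(overlay, "Camera")
    for attr in ("longitude", "latitude", "altitude", "heading", "tilt", "roll"):
        ET.SubElement(camera, attr).text = f"{getattr(cam, attr):.6f}"
    ET.SubElement(camera, "altitudeMode").text = "relativeToGround"
    ET.SubElement(ET.SubElement(overlay, "Icon"), "href").text = href
    volume = ET.SubElement(overlay, "ViewVolume")
    for key in ("leftFov", "rightFov", "bottomFov", "topFov"):
        ET.SubElement(volume, key).text = f"{fovs[key]:.6f}"
    # Distance in meters from the camera to the photo plane (an assumed default).
    ET.SubElement(volume, "near").text = f"{near:.1f}"
    ET.SubElement(overlay, "shape").text = "rectangle"
    return ET.tostring(kml, encoding="unicode")
```

For example, `view_volume(800, 600, 60.0, 200, 150, 400, 300)` describes a photo scaled to half the width of an 800×600 screen shot and centered on it. Its `rightFov` comes out at about 16.1 degrees rather than 15, because the edge angles follow the arctangent of the pixel offset instead of scaling linearly with width.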

If you check the “Results” area of the website, you’ll see that we also developed a proof of concept for a 3D posing method, in which the user drags 3D geometry around to match the photo while an algorithm interactively solves for the correct pose. This is hardcore computer science, and it was great to see the folks from ICT put it together. A fascinating experience overall.
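ICT's solver isn't described here, so the following is only an illustrative sketch of the general idea: fitting camera parameters to 2D-3D correspondences by iterative least squares. It makes strong simplifying assumptions. The camera position is taken as already known (for instance, from the map pick), points are given in local east/north/up meters relative to the camera, there is no roll, and only heading, pitch, and focal length (in pixels) are solved for, using damped Gauss-Newton with a numerical Jacobian. `project` and `solve_pose` are hypothetical names.

```python
import numpy as np


def project(params, points):
    """Project camera-relative ENU points (N x 3, meters) to image coordinates.

    params = (heading, pitch, focal): heading in degrees clockwise from north,
    pitch in degrees above the horizon, focal length in pixels. Image x points
    right and y points up, with the origin at the image center.
    """
    heading, pitch, focal = params
    h, p = np.radians(heading), np.radians(pitch)
    forward = np.array([np.sin(h) * np.cos(p), np.cos(h) * np.cos(p), np.sin(p)])
    right = np.array([np.cos(h), -np.sin(h), 0.0])  # horizontal, so no roll
    up = np.cross(right, forward)
    cam = points @ np.column_stack([right, up, forward])  # camera-space coords
    return focal * cam[:, :2] / cam[:, 2:3]  # pinhole perspective divide


def solve_pose(points, observed, guess, iterations=50, damping=1e-3):
    """Fit (heading, pitch, focal) so projected points match observed ones."""
    params = np.asarray(guess, dtype=float)
    for _ in range(iterations):
        residual = (project(params, points) - observed).ravel()
        # Central-difference Jacobian of the residuals for each parameter.
        jac = np.empty((residual.size, params.size))
        for i in range(params.size):
            step = np.zeros_like(params)
            step[i] = 1e-6 * max(1.0, abs(params[i]))
            diff = project(params + step, points) - project(params - step, points)
            jac[:, i] = diff.ravel() / (2 * step[i])
        # Damped normal equations (Marquardt-style diagonal scaling).
        jtj = jac.T @ jac
        delta = np.linalg.solve(jtj + damping * np.diag(np.diag(jtj)), -jac.T @ residual)
        params = params + delta
        if np.linalg.norm(delta) < 1e-10:
            break
    return params
```

In an interactive setting, each drag would update `observed`, and the solver could be restarted from the previous solution, which keeps the starting guess close and the iteration count low.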
